Perfect Prevention vs. Perfect Detection

I think a lot about the qualities that make good blocking software. By blocking software, I mean software a user runs to restrict their own access to apps and websites. One property I find particularly interesting is strictness, which I'll define as the effort required to bypass a blocking rule a user has created for themselves.

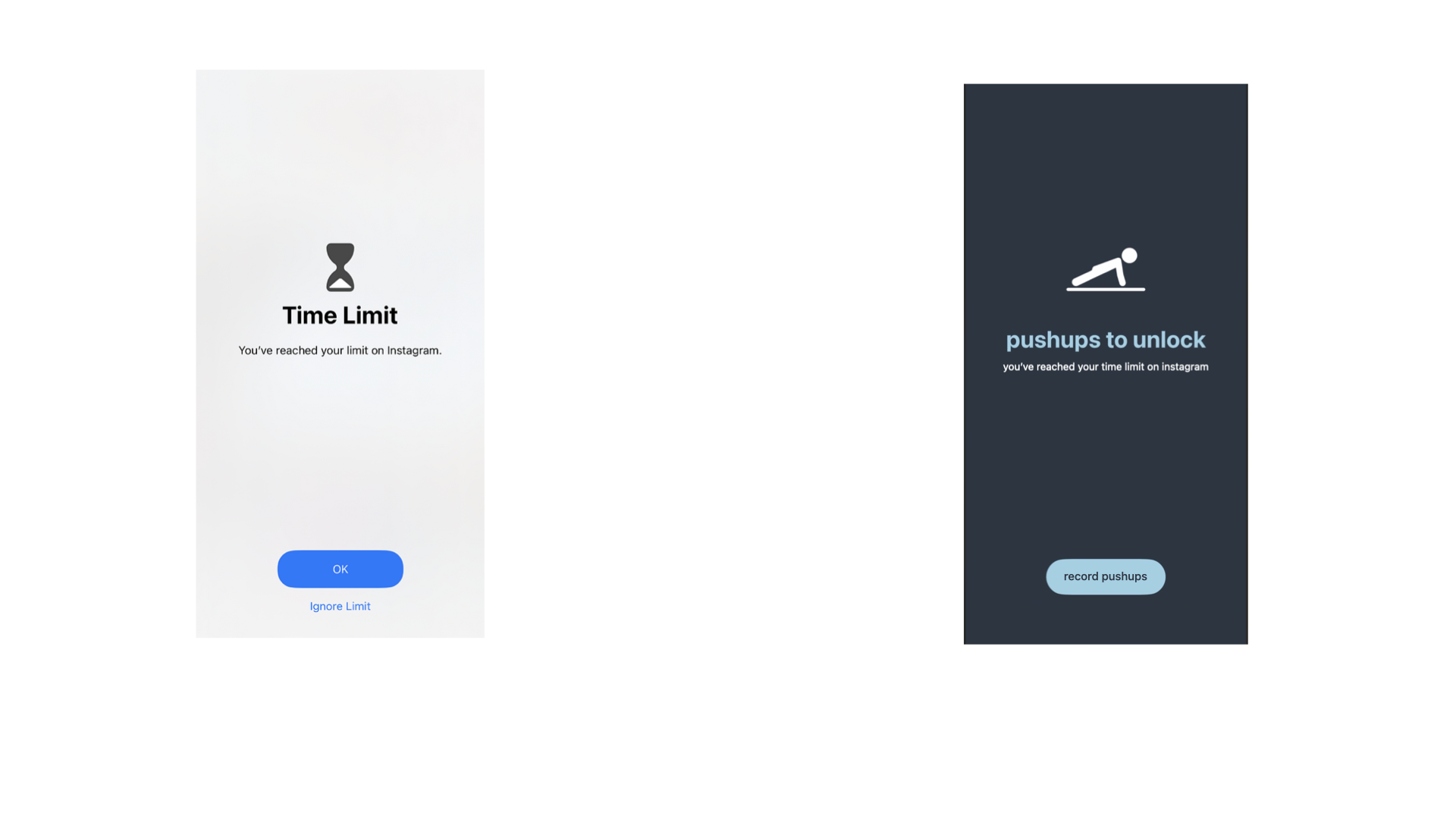

Consider the spectrum of strictness. On the low end, Apple's Screen Time sets a comically low hurdle for bypassing self-imposed limits. One button press and you're back to whatever presumably counterproductive activity you were doing before you used up your budget.

On the high end, Clearspace has a mode that will require 10 pushups for every minute on restricted apps. It would take a colossal amount of physical effort to waste any meaningful amount of time in this mode.

Now here's the interesting part: users can always uninstall blocking software. So if a user is in a truly self-destructive state, the strictness of the system becomes the effort required to delete the blocking software.

In other words, the environment imposes a ceiling on strictness equal to the effort required to delete the blocking software. You can try to make your software harder to delete, and many have, but deletion is always possible. Depending on the platform, it might even be trivially easy (on iOS, for example).

This is a real shame for people who want no loopholes: their devices simply won't allow it. To make this concrete, I'll confess something revealing about my willpower: if I could push a button and make it completely impossible to use YouTube for more than 20 minutes per day, I'd do it.

Analogous problems

Inescapably, I can't do that, but all is not lost. Blocking software's fundamental problem is that the adversary (the user) always has permission to disable it. This problem isn't novel, and analogous problems have elegant solutions. The following might ring a bell:

“Federal law prohibits smoking on all flights, including the use of e-cigarettes. Tampering with, disabling, or destroying the smoke detectors in the lavatories is strictly prohibited.”

The airline wants to prevent smoking, but the crew can't physically stop it, particularly in the lavatory. So they invest in detection (the smoke detector) and in tamper-proofing the detection mechanism.

To extend the analogy, blocking software could certainly detect uninstallation. For example, the server could expect a ping from the client at routine intervals and assume deletion if a ping doesn't arrive. But detection alone doesn't do anything to stop it.

“... destroying the smoke detectors in the lavatories is strictly prohibited. This is a violation of FAA regulations and may result in severe penalties.”

But that does. The airline creates a retroactive deterrent and communicates it. In doing so, they prevent something they don’t have the ability to physically stop. This pattern is reasonably common. When a party can’t physically stop an adversary, they’ll invest in detection and deterrence instead.

- Problem: I don't want any router between my laptop and Google's server to modify the file I'm uploading to Drive, but I can't physically prevent it.
  Solution: Data integrity checks happen at multiple layers of the network stack (detection), and bad actors are cut out of the routing path if validation fails (deterrent).

- Problem: MLB doesn't want its players using steroids, but it can't physically stop them.
  Solution: Drug tests are administered randomly (detection), and a player who tests positive faces an 80-game suspension for a first offense (deterrent).
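Back to blocking software: the uninstall detection described earlier, where a server expects a ping from the client at routine intervals, is essentially a dead man's switch. Below is a minimal Python sketch of that idea. The `HeartbeatMonitor` class, the fixed ping interval, the grace period and the `on_missing` callback are all hypothetical. The callback stands in for whatever deterrent you attach. None of this is Clearspace's actual implementation or any real service's API. Timestamps are passed in explicitly, where a real server would use something like `time.time()`.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class HeartbeatMonitor:
    """Hypothetical server-side dead man's switch for a blocking app.

    Each client is expected to ping every `interval_s` seconds. If a client
    stays silent for more than `grace_periods` intervals, the monitor assumes
    the app was deleted and calls `on_missing` once for that disappearance.
    """

    interval_s: float
    on_missing: Callable[[str], None]  # deterrent hook, e.g. notify a friend
    grace_periods: int = 3  # tolerate a few missed pings (dead battery, no signal)
    last_seen: dict[str, float] = field(default_factory=dict, init=False)
    reported: set[str] = field(default_factory=set, init=False)

    def ping(self, user: str, now: float) -> None:
        """Record a heartbeat; a client that comes back clears its alert."""
        self.last_seen[user] = now
        self.reported.discard(user)

    def check(self, now: float) -> list[str]:
        """Detection step: return users newly presumed to have deleted the app."""
        deadline = self.interval_s * self.grace_periods
        newly_missing = []
        for user, seen in self.last_seen.items():
            if now - seen > deadline and user not in self.reported:
                self.reported.add(user)
                self.on_missing(user)  # deterrence step
                newly_missing.append(user)
        return newly_missing
```

Wiring `on_missing` to something that messages an accountability partner is what turns this detector into a deterrent. The grace period is a tradeoff. A phone with a dead battery or no signal looks exactly like a deleted app to the server. A short deadline therefore produces false alarms, and a long one delays the deterrent.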

Exceeding the strictness ceiling

So my dream of perfect YouTube strictness might have some life. We just need a deterrent. If I could run software that both:

- Restricts YouTube to 20 minutes a day

- Automatically reports to my brother if I delete it

That would solve all my problems. It would also be, as far as I can tell, the strictest possible system I could impose on myself. It's easy to imagine other useful deterrents that could be swapped in here, such as financial penalties.

But the critical point is that the cost of deleting the software is no longer just the effort of deleting it. It now also includes the cost imposed by the deterrent (in my case, defusing the wrath of my brother, who knows about my anti-YouTube goal).

So the strictness of the entire system can actually exceed the ceiling we ran into earlier: the effort of deletion.
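One way to state this more precisely is as a simplified model of my own, not anything rigorous. Assume a user escapes by whichever route is cheapest. The symbols below are introduced here for illustration:

- $E_{\text{rule}}$ is the effort to bypass a rule from inside the software.
- $E_{\text{del}}$ is the effort to delete the software.
- $D$ is the cost the deterrent imposes once deletion is detected.

$$
S_{\text{no deterrent}} = \min(E_{\text{rule}},\, E_{\text{del}}) \le E_{\text{del}}
$$

$$
S_{\text{deterrent}} = \min(E_{\text{rule}},\, E_{\text{del}} + D) \le E_{\text{del}} + D
$$

Without a deterrent, no amount of in-app strictness pushes $S$ past $E_{\text{del}}$. With one, suppose $E_{\text{rule}} > E_{\text{del}}$ and $D > 0$. Then both terms of the minimum exceed $E_{\text{del}}$, so $S_{\text{deterrent}} > E_{\text{del}}$. The ceiling moves up from $E_{\text{del}}$ to $E_{\text{del}} + D$.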

Edit: Sept 2024

I wrote this 3 months ago, and since then I've been spotting prevention vs. detection problems everywhere I look. The tamper seals on drink bottles, traffic cameras at intersections, college plagiarism rules: each bears the signature of a "detect and deter" strategy. Unsurprisingly, each addresses a problem where prevention is impractical or impossible.

Since writing this, our thinking at Clearspace has also been significantly shaped by it. Strictness certainly isn't the only thing to optimize for, but it became increasingly clear that letting users close every loophole, if they want to, was the next most important thing to build.

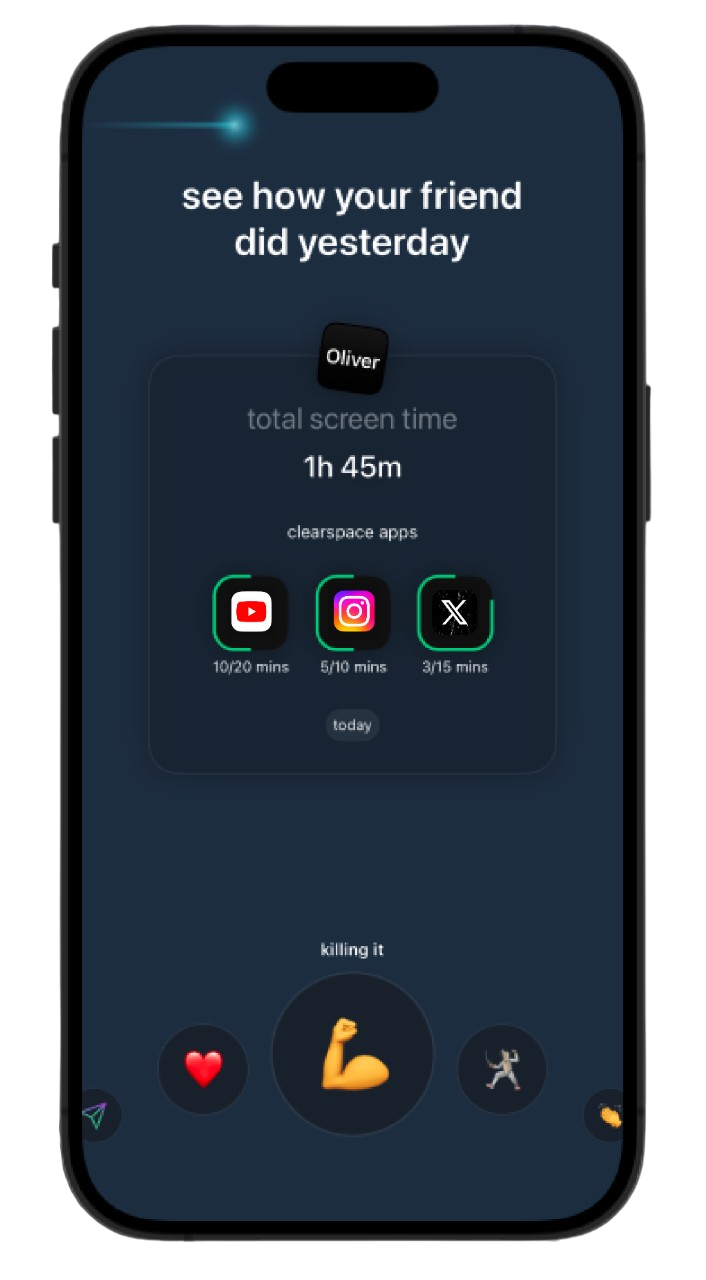

To that end, we've added robust social accountability. Thousands of users now share their screen time with a friend who gets notified if they delete Clearspace. For me personally, this change has been the only perfectly effective strategy I've ever tried for enforcing a blocking protocol.

What my brother sees each day: my usage of YouTube, Twitter, and Instagram, and my budget

What he'd see if I deleted Clearspace